Administrator panels

The administrator panels allow you to configure OKA and its plugins.

To access these panels, you must be an admin user.

An admin user is created during OKA installation. Other users can be created from the Users section in this administrator panel (see Users).

You can access OKA administration panels here: https://<OKA SERVER>/admin/ or using the admin panel button in OKA navigation bar.

The VIEW SITE link at the top right of the administrator panel allows you to go back to OKA interface.

Analyze-IT - Filters

In this panel, you will find all filter profiles created by users from the OKA interface (see Save filters as profile). For more details about the filters, see Filters.

CloudSHaper

Conf cloud reports

To use the cost consumption plugin of CloudSHaper, you must define in this panel the AWS bucket where the consumption reports are stored. You can also filter the reports for a specific account or on a delimited period.

Data manager

Cluster configurations

In this panel, you can update the number of nodes and cores of your cluster.

The minimum memory per job is used to identify memory-bound nodes (see Load > Occupancy). It is the minimum available memory a node needs to be able to run a job. If not provided, it is automatically computed as the median of the memory requested by users in the last accounting logs ingested.
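As a rough illustration of the default described above, the sketch below computes a median over a handful of memory requests and uses it as a threshold. The sample values, the MB unit, and the helper name `can_fit_a_job` are assumptions made for this example; they are not part of OKA.

```python
from statistics import median

# Hypothetical memory requests (in MB) taken from recently ingested jobs.
requested_memory_mb = [2000, 4000, 4000, 8000, 16000]

# Default used when the field is left empty: the median request.
min_memory_per_job_mb = median(requested_memory_mb)


def can_fit_a_job(available_memory_mb, threshold_mb=min_memory_per_job_mb):
    """Return True if a node still has enough free memory to run a job.

    Assumption: a node whose free memory is below the threshold is the kind
    of node the occupancy view would report as memory-bound.
    """
    return available_memory_mb >= threshold_mb
```

With these sample values the threshold is 4000 MB, so a node with 3000 MB of free memory would not be able to accept a job.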

You can also modify your cluster layout (i.e. the physical position of the racks in the room) by setting racks_layout.

The layout must follow this format:

[{"row": "A", "rack_number": [1, 2, 3, 4, 5, 6, 7], "rack_map": ['x1y1', 'x2y2', 'x3y3', 'x4y4', 'x5y5', 'x6y6', 'x7y7', '', '', '']},

{"row": "B", "rack_number": [8, 9, 10, 11, 12, 13, 14, 15], "rack_map": ['', '', 'x8y8', 'x9y9', 'x10y10', 'x11y11', 'x12y12','x13y13', 'x14y14', 'x15y15']}]

Where row is the name of the row, rack_number is a list of racks in this row and rack_map is a list of rack positions within this row.

Two subsequent rows are considered to face each other. In the example above, there are 10 positions in each row and some of them are empty, meaning, for example, that there is no rack in front of rack #1.
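To make the facing rule concrete, here is a small sketch that reads the example layout and lists which racks face each other, position by position. It assumes that the non-empty entries of rack_map correspond, in order, to the entries of rack_number; the helper names are hypothetical. Because the example mixes single and double quotes, it is parsed with `ast.literal_eval` rather than a strict JSON parser.

```python
import ast

LAYOUT_TEXT = """[{"row": "A", "rack_number": [1, 2, 3, 4, 5, 6, 7], "rack_map": ['x1y1', 'x2y2', 'x3y3', 'x4y4', 'x5y5', 'x6y6', 'x7y7', '', '', '']},
{"row": "B", "rack_number": [8, 9, 10, 11, 12, 13, 14, 15], "rack_map": ['', '', 'x8y8', 'x9y9', 'x10y10', 'x11y11', 'x12y12','x13y13', 'x14y14', 'x15y15']}]"""


def racks_by_position(row):
    """Return one entry per position: a rack number, or None for an empty slot.

    Assumption: non-empty rack_map slots map to rack_number in order.
    """
    numbers = iter(row["rack_number"])
    return [next(numbers) if slot else None for slot in row["rack_map"]]


def facing_racks(layout):
    """Pair the positions of the first two rows, which face each other."""
    first, second = layout[0], layout[1]
    return list(zip(racks_by_position(first), racks_by_position(second)))


layout = ast.literal_eval(LAYOUT_TEXT)
pairs = facing_racks(layout)
```

In this sketch, `pairs[0]` pairs rack 1 with an empty slot, which matches the remark that there is no rack in front of rack #1, while `pairs[2]` pairs rack 3 with rack 8.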

In this panel there is also an option to specify a Timezone.

For the time being, this is only used when data are retrieved directly from a Slurm scheduler, to avoid getting data in UTC when you expect them in your own timezone.
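The effect of such a setting can be pictured with a generic conversion from UTC to a local timezone. This is only an illustration of the general idea using the Python standard library, with a fixed UTC+1 offset chosen arbitrarily; it does not show how OKA applies the Timezone option internally.

```python
from datetime import datetime, timedelta, timezone

# A job submission time as it would appear when expressed in UTC.
submit_utc = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)

# Hypothetical local timezone for the example: a fixed UTC+1 offset.
local_tz = timezone(timedelta(hours=1))

# The same instant, expressed in the local timezone.
submit_local = submit_utc.astimezone(local_tz)
```

Both values describe the same instant; only the displayed wall-clock time differs (12:00 in UTC, 13:00 at UTC+1).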

Conf apis

In this panel, you can update the configuration of the input and output capabilities of the available APIs.

This includes, for example, ouput_db objects used to configure access to your Elasticsearch database.

Conf files

In this panel, you can update the paths to your input files (e.g. accounting file) and the output directories where your trained models are saved.

Conf job schedulers

In this panel, you can update the configuration of your job scheduler (version, how to connect…).

See also Clusters management for how to create a new cluster and manually upload job scheduler logs through the web interface.

Conf pipelines

In this panel, you can associate filters (see Filters) and data enhancers (see Data enhancers) to each pipeline.

Interface configuration

In this panel, you can configure OKA’s interface globally and at the plugin level:

Global: Choose a currency to use for the costs.

Resources plugin: Provide the list of bins to use for the nodes, GPUs, cores and memory bar charts.

Load plugin > Cores occupancy graph: Select the traces to display by default.

Pipelines

You will find here the list of all the pipelines set up in your environment.

Their names can be used to execute each pipeline manually using the run API (see Usage).

Pipelines can also be edited, for example to change the queue used when they are executed through a task.

Periodic Tasks

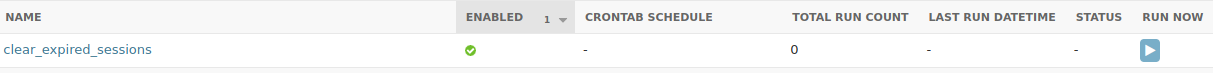

In this panel, you can access all available tasks provided by OKA and see basic information about them at a glance. Tasks can be edited, for example to enable or disable them or to update the CRONTAB value associated with them.

ENABLED: Shows whether the task is set up to run automatically.

CRONTAB SCHEDULE: Shows the current schedule on which the task will run if enabled.

Warning

Be careful if you wish to delete a Crontabs object: doing so automatically removes all associated Periodic tasks, and you would have to recreate them manually.

STATUS: Gives you information about the execution of the process handled by the scheduled task. This is not the status of the periodic task itself but that of the method that ran within the task. If the periodic task is attached to a pipeline, the status tells you whether the pipeline execution was successful.

RUN NOW: Allows you to request the immediate execution of the task.

Meteo Cluster - Conf

In this panel, you can configure the Meteo Cluster pipelines. Available configuration parameters are:

Algorithms global parameters

algorithm: Algorithm used by the predictor.

LSTM: Long Short-Term Memory, a neural network with feedback connections.

NeuralProphet: A neural network based time-series model, inspired by Facebook Prophet and AR-Net.

epochs: Number of times the model is trained on all training data. Default is 1000.

epochs_auto: Switch on/off (check/uncheck). Whether to automatically determine the number of epochs to avoid overfitting (check). The minimum possible number of epochs is 10% of the number of epochs. Epochs optimization is done by using an early stopping callback during multiple trainings on the train dataset. Default is on.

layers: Number of layers of the neural network. Default is 2.

batch_size: Number of samples propagated through the neural network. Default is 32.

n_lags: Number of timesteps to consider in the past for each prediction. For example, using 14 as n_lags, the model will use a sequence of 14 timesteps to predict the 15th one. Default is 30.

pred_seq: Number of steps ahead to consider when making the predictions. For example, using 2 as pred_seq, the model will try to predict 2 steps ahead. Default is 1.
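The relationship between n_lags and pred_seq can be sketched as a sliding window over a time series. The function below is a minimal illustration written for this page, not OKA's actual data preparation code; the name `make_windows` and the toy series are assumptions.

```python
def make_windows(series, n_lags, pred_seq=1):
    """Cut a series into (inputs, targets) pairs.

    Each pair uses n_lags past timesteps as inputs and the next
    pred_seq timesteps as the values to predict.
    """
    samples = []
    last_start = len(series) - n_lags - pred_seq
    for start in range(last_start + 1):
        inputs = series[start:start + n_lags]
        targets = series[start + n_lags:start + n_lags + pred_seq]
        samples.append((inputs, targets))
    return samples


# Toy series of 20 timesteps with values 1..20.
series = list(range(1, 21))
windows = make_windows(series, n_lags=14, pred_seq=1)
first_inputs, first_targets = windows[0]
```

With n_lags set to 14 and pred_seq set to 1, the first window uses timesteps 1 to 14 as inputs and the 15th timestep as the target, as in the n_lags description above.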

LSTM algorithm specific parameters

units: Number of (hidden) units of the first layer. The number of units of each following layer is units divided by the position of the layer (e.g., units / 2 for the second layer). Default is 96.
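The rule above can be written out in a few lines. This sketch assumes integer division when the result is not a whole number, which the description does not specify; the function name is hypothetical.

```python
def units_per_layer(units=96, layers=2):
    """Return the number of units of each layer, first layer first.

    Assumption: the division is rounded down to an integer.
    """
    return [units // position for position in range(1, layers + 1)]


default_sizes = units_per_layer()           # defaults: 96 units, 2 layers
three_layer_sizes = units_per_layer(96, 3)
```

With the default values, the first layer has 96 units and the second has 48; a third layer would have 32.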

NeuralProphet algorithm specific parameters

learning_rate: Maximum learning rate. Default is 0.01.

learning_rate_auto: Switch on/off (check/uncheck) automatic learning rate calculation. Default is on.

n_changepoints: Number of points where the trend rate may change along the time series. Default is 30 changepoints.

ar_reg: Regularization in AR-Net. 0 induces complete sparsity and 1 imposes no regularization at all. Default is 0.5.

To filter the input data used for the model training, you will have to update each Meteo Cluster pipeline configuration (see Conf pipelines).

Predict jobs - Conf

In this panel, you can configure the Predict-IT pipelines. Available configuration parameters are:

Train / test split

split_type: Split the train and test datasets chronologically or by size. When chronologically (default), the latest jobs are kept for testing (this mimics real production mode). When size, jobs are shuffled and split.

split_chrono: String representing the last chunk of data (chronologically) in the logs which should be used for testing. You can provide it in terms of x months (xm), weeks (xw), days (xd), hours (xH), minutes (xM), or seconds (xS). For example, if you want to save the last month for testing (while using the rest of the data for training), use split="1m". By default, it will keep the last 20% of jobs for testing. This is used only if split_type is chronologically.

split_size: Test dataset size (0 to 1). Default is 0.2 to use 20% of jobs for testing. This is used only if split_type is size.

shuffle: Switch on/off (check/uncheck) data shuffling. Default is on. When splitting chronologically, datasets are shuffled after splitting. When splitting by size, the dataset is shuffled before splitting.
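The difference between the two split types can be sketched as follows. This is an illustrative reimplementation under simple assumptions (jobs as dictionaries with hypothetical `id` and `submit` fields, a fraction-based cut for both modes); it is not the code used by Predict-IT.

```python
import random


def split_chronologically(jobs, test_fraction=0.2):
    """Keep the most recent jobs for testing, the older ones for training."""
    ordered = sorted(jobs, key=lambda job: job["submit"])
    cut = len(ordered) - round(len(ordered) * test_fraction)
    return ordered[:cut], ordered[cut:]


def split_by_size(jobs, test_fraction=0.2, seed=12345):
    """Shuffle the jobs first, then cut off the test fraction."""
    shuffled = list(jobs)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - round(len(shuffled) * test_fraction)
    return shuffled[:cut], shuffled[cut:]


# Ten toy jobs submitted one minute apart (hypothetical fields).
jobs = [{"id": i, "submit": i * 60} for i in range(10)]
chrono_train, chrono_test = split_chronologically(jobs)
size_train, size_test = split_by_size(jobs)
```

In the chronological case the test set contains the two latest jobs, whereas the size-based split picks two jobs at random after shuffling.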

Cross-validation

cv: Switch on/off (check/uncheck) cross-validation. If on, it evaluates the predictor performance by cross-validation on the train dataset. The displayed score is the one set by cv_scoring_multiclass. Turning it off might be useful to reduce overall calculation time. Default is off.

cv_fold_strategy: Strategy used to build the cross-validation folds if cv is on.

kfold: The standard cross-validation that splits the dataset into k consecutive folds with shuffling.

stratified: The folds are made by preserving the percentage of samples for each bin.

cv_scoring_multiclass: Score computed at each cross-validation iteration.

cv_n_splits: Number of folds for cross-validation. Default is 4.
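For readers unfamiliar with k-fold cross-validation, the sketch below shows how a shuffled dataset can be cut into consecutive folds, each fold serving once as the test set. It is a generic, standard-library illustration of the kfold strategy with an assumed helper name and seed, not the implementation used by OKA.

```python
import random


def kfold_indices(n_samples, n_splits=4, seed=12345):
    """Return a list of (train_indices, test_indices), one pair per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    base, extra = divmod(n_samples, n_splits)
    folds, start = [], 0
    for fold in range(n_splits):
        # The first `extra` folds get one more sample when sizes are uneven.
        size = base + (1 if fold < extra else 0)
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        folds.append((train_idx, test_idx))
        start += size
    return folds


folds = kfold_indices(10, n_splits=4)
```

Every sample appears in exactly one test fold, and the model would be trained and scored once per fold. A stratified strategy would additionally keep the proportion of each bin roughly equal across folds.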

Algorithms global parameters

algorithms: Algorithm used by the predictor.

decision tree classifier: A flowchart-like tree structure where an internal node represents a feature, the branch represents a decision rule, and each leaf node represents the outcome.

random forest classifier: A meta estimator that fits a number of decision tree classifiers on various sub-samples of the dataset.

extra-trees classifier: A meta estimator that fits a number of randomized decision trees (a.k.a. extra-trees) on various sub-samples of the dataset.

adaboost classifier: A meta-estimator that begins by fitting a classifier on the dataset and then fits additional copies of the classifier on the same dataset, but with the weights of incorrectly classified instances adjusted so that subsequent classifiers focus more on difficult cases.

gradient boosting for classification: The AdaBoosting method combined with weighted minimization, after which the classifiers and weighted inputs are recalculated. The objective of Gradient Boosting classifiers is to minimize the loss, similarly to gradient descent in a neural network.

optimize: Switch on/off (check/uncheck) automatic optimization of model hyperparameters. Bayesian optimization using Gaussian Processes is used to optimize the hyperparameters by maximizing the f1-score (macro). Default is off. Depending on the selected algorithms, different hyperparameters will be optimized:

decision tree classifier: max_depth, max_features, min_samples_leaf, min_samples_split

random forest classifier: bootstrap, max_depth, max_features, min_samples_leaf, min_samples_split, n_estimators

extra-trees classifier: bootstrap, max_depth, max_features, min_samples_leaf, min_samples_split, n_estimators

adaboost classifier: learning_rate, n_estimators

gradient boosting for classification: max_depth, max_features, min_samples_leaf, min_samples_split, n_estimators, learning_rate

optimize_n_models: Number of combinations to test during hyperparameters optimization. One combination of hyperparameters corresponds to one model. Minimum is 11 models.

algo_max_features: Size of the random subsets of features to consider when splitting a node.

sqrt: Square root of the total number of features.

log2: Base-2 logarithm of the total number of features.

None: No feature subset selection is performed.

auto: Same as sqrt (default).

algo_n_estimators: The number of trees in the forest, or the number of boosting stages to perform (gradient boosting for classification). Default is 100 estimators.

algo_max_depth: Maximum depth of the tree (number of splits). If the number of splits is too low, the model underfits the data, and if it is too high, the model overfits. Default is None, meaning no limitation is applied.

algo_min_samples_split: The minimum number of samples required to split an internal node of the tree. Default is 2.

algo_min_samples_leaf: The minimum number of samples required to be at a leaf/external node. Default is 1 sample per leaf.

algo_bootstrap: Switch on/off (check/uncheck). Whether bootstrap samples are used when building trees. If unchecked, the whole dataset is used to build each tree. Default is on.

algo_random_state: Controls the random seed. Default is 12345.

algo_criterion: The function to measure the quality of a split.

gini: Gini impurity (probability of a sample to be classified incorrectly).

entropy: Shannon information gain.

algo_n_jobs: Number of jobs to run in parallel. Default is None, meaning 1 job. -1 means using all available cores.

algo_class_weight: Weights associated with bins. If None, all classes are supposed to have weight one.

The balanced mode uses the target values to automatically adjust weights inversely proportional to bin frequencies in the input data.

The balanced_subsample mode is the same as balanced except that weights are computed based on the bootstrap sample for every tree grown.

algo_learning_rate: Weight applied to each classifier at each boosting iteration.
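To illustrate what weights inversely proportional to bin frequencies look like, the sketch below applies the formula scikit-learn documents for its "balanced" mode, n_samples / (n_classes * count). Whether OKA relies on that exact formula is an assumption made here because the parameter names match; the bin labels and function name are hypothetical.

```python
from collections import Counter


def balanced_weights(labels):
    """Weight each bin by n_samples / (n_classes * number of samples in the bin)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Hypothetical bins: many short jobs, few long ones.
labels = ["short"] * 6 + ["medium"] * 3 + ["long"] * 1
weights = balanced_weights(labels)
```

Rare bins receive larger weights than frequent ones, so in this toy dataset the single "long" job weighs more than each of the six "short" jobs.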

AdaBoost algorithm specific parameters

algo_base_estimator: The base estimator from which the boosted ensemble is built: decision tree classifier or random forest classifier.

algo_ada_algorithm: Multiclass AdaBoost function: SAMME (Stagewise Additive Modeling) or SAMME.R. The SAMME.R algorithm typically converges faster than SAMME, achieving a lower test error with fewer boosting iterations. Default is SAMME.R.

To filter the input jobs used for the model training (to define a particular workload for example), you have to update each Predict-IT pipeline configuration (see Conf pipelines).

Users

In this panel, you can create, update or delete a user. Three types of users can be created depending on their permissions:

A regular user: has no advanced permission and has access to the OKA interface only.

A Staff user: can access the OKA interface and has permission to connect to the admin panel to clear the caches.

A Superuser/admin user (MUST BE Staff): can access the OKA interface and has permission to connect to the whole admin panel.

Upon creation of a new user, you will need to activate the user in this panel.

Clear cache

Caches temporarily store the results of OKA APIs to give you faster results if you access the same data many times in a row. Caches are automatically cleared:

On a time basis, based on the CACHE_DURATION variable set in ${OKA_INSTALL_DIR}/conf/oka.conf (every day by default)

At pipeline execution (accounting logs ingestion, Predict-IT training…)

If you notice inconsistencies in the data shown by the OKA interface, you can use this panel to manually reset the caches. We advise you to clear both the fallback and main caches.

Rosetta

Use this panel to customize the terms used by the OKA interface (titles, trace names…). The eligible terms have been chosen by the OKA development team. If you wish to customize other terms, please contact UCit Support or your OKA reseller.

On Rosetta interface, use the PROJECT filter to identify the custom applications.

For each application, you will be able to customize the eligible terms.

Click on SAVE AND TRANSLATE NEXT BLOCK to save your changes.

Restart the OKA server and refresh the OKA interface to see your modifications (be careful that your browser cache doesn’t show you the unmodified OKA interface).